Build a conversational AI app with React Native and Flow

Learn how to create a mobile application with React Native that integrates the Speechmatics Flow service. This guide demonstrates how to build the app using the Expo framework, implementing real-time audio communication with Flow's servers.


Prerequisites

Before getting started, ensure you have:

- Node.js (LTS) installed on your system

- A development environment configured for Expo development builds

Project Setup

Start by creating a fresh Expo project:

```bash
npx create-expo-app@latest
```

To remove the example code and start with a clean slate:

```bash
npm run reset-project
```

This command preserves the example files by moving them to an 'app-example' directory while creating a new clean app directory. You can safely remove the 'app-example' directory if you don't need it for reference.

Essential Dependencies

Install the following packages to enable Flow integration and audio handling:

```bash
# React version of Flow client
npm i @speechmatics/flow-client-react

# Polyfill for the EventTarget class
npm i event-target-polyfill

# Expo native module to handle audio
npm i @speechmatics/expo-two-way-audio

# Only needed for this example. See the comment above `createSpeechmaticsJWT` in the code below
npm i @speechmatics/auth
```

The Flow client uses EventTarget, which is typically available in browsers but not in React Native. For that reason, we've installed the polyfill `event-target-polyfill`.
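As a rough illustration of the pattern the polyfill supports, here is a hypothetical event-emitting client built on the standard `EventTarget` and `Event` globals (both exist in browsers and Node.js 15+). The names `ToyFlowClient` and `AgentMessageEvent` are invented for this sketch and are not part of the Flow client's API. Note that `class ... extends EventTarget` is evaluated when its module loads, so the global must already exist at that moment; that is likely why the polyfill import has to come before every other import.

```typescript
// Hypothetical event carrying a text payload (not part of the Flow client API).
class AgentMessageEvent extends Event {
  readonly text: string;
  constructor(text: string) {
    super("agentMessage");
    this.text = text;
  }
}

// Hypothetical client that notifies listeners through EventTarget.
// `extends EventTarget` runs at module load, so EventTarget must already be
// defined globally (natively or via a polyfill) before this module is imported.
class ToyFlowClient extends EventTarget {
  receive(text: string): void {
    // dispatchEvent invokes registered listeners synchronously.
    this.dispatchEvent(new AgentMessageEvent(text));
  }
}

// Quick runtime check for environments where EventTarget may be missing.
function hasEventTarget(): boolean {
  return typeof globalThis.EventTarget === "function";
}
```

In a React Native app without the polyfill, `hasEventTarget()` would be expected to return `false`, and merely importing a module like this one would throw.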

Building the User Interface

Let's create a minimal user interface.

Start by clearing the app/ directory and creating a new index.tsx file with a basic UI structure:

```tsx
import { useState } from "react";
import { Button, StyleSheet, View, Text } from "react-native";

export default function Index() {
  const [isConnecting, setIsConnecting] = useState(false);
  const [isConnected, setIsConnected] = useState(false);
  return (
    <View style={styles.container}>
      <Text>Talk to Flow!</Text>
      <Button
        title={isConnected ? "Disconnect" : "Connect"}
        disabled={isConnecting}
      />
    </View>
  );
}

const styles = StyleSheet.create({
  container: {
    flex: 1,
    justifyContent: "center",
    alignItems: "center",
  },
});
```

The view above renders a Connect/Disconnect button that doesn't do anything yet.

Let's run it on the simulator to see how it looks:

```bash
# For iOS simulator
npx expo run:ios

# For Android emulator
npx expo run:android
```

This launches the Metro Bundler and displays some options. If it shows "Using Expo Go", switch to a development build by pressing s, then press r to reload the app. Some features we are going to include, such as the native module for handling audio, don't work properly in Expo Go.

Implementing Flow Connection

It's time to add some functionality to this example.

We'll start by implementing the connect and disconnect logic.

For that we are going to use the @speechmatics/flow-client-react package.
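The Connect/Disconnect button in the code that follows is driven entirely by the `socketState` value returned by `useFlow`. As a sketch, that mapping can be pulled out into a pure function that is easy to unit test. The `ConnectionState` union is an assumption based on the states this guide checks for; the actual type exported by `@speechmatics/flow-client-react` may differ.

```typescript
// Assumption: the socket states this guide compares against, plus "closed" and
// undefined for "not connected yet". Check the library's own type before reusing.
type ConnectionState = "connecting" | "open" | "closing" | "closed" | undefined;

interface ConnectButtonState {
  title: "Connect" | "Disconnect";
  disabled: boolean;
}

// Mirrors the inline logic of the <Button> in index.tsx:
// show "Disconnect" while open, and block taps during transitions.
function connectButtonState(state: ConnectionState): ConnectButtonState {
  return {
    title: state === "open" ? "Disconnect" : "Connect",
    disabled: state === "connecting" || state === "closing",
  };
}
```

In the component this would read as `const { title, disabled } = connectButtonState(socketState);`, keeping the JSX free of nested conditions.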

Our /app/index.tsx file should look as follows:

```tsx
// The polyfill should be the first import in the app
import "event-target-polyfill";
import { Button, StyleSheet, View, Text } from "react-native";
import { FlowProvider, useFlow } from "@speechmatics/flow-client-react";

import { createSpeechmaticsJWT } from "@speechmatics/auth";

export default function Index() {
  return (
    <FlowProvider
      appId="react-native-flow-guide"
      websocketBinaryType="arraybuffer"
    >
      <Flow />
    </FlowProvider>
  );
}

function Flow() {
  const { startConversation, endConversation, sendAudio, socketState } =
    useFlow();

  const obtainJwt = async () => {
    const apiKey = process.env.EXPO_PUBLIC_SPEECHMATICS_API_KEY;
    if (!apiKey) {
      throw new Error("API key not found");
    }
    // WARNING: This is just an example app.
    // In a real app you should obtain the JWT from your server.
    // For example, `createSpeechmaticsJWT` could be used on a server running JS.
    // Otherwise, you will expose your API key to the client.
    return await createSpeechmaticsJWT({
      type: "flow",
      apiKey,
      ttl: 60,
    });
  };

  const handleToggleConnect = async () => {
    if (socketState === "open") {
      endConversation();
    } else {
      try {
        const jwt = await obtainJwt();
        await startConversation(jwt, {
          config: {
            template_id: "flow-service-assistant-humphrey",
            template_variables: {
              timezone: "Europe/London",
            },
          },
        });
      } catch (e) {
        console.log("Error connecting to Flow: ", e);
      }
    }
  };

  return (
    <View style={styles.container}>
      <Text>Talk to Flow!</Text>
      <Button
        title={socketState === "open" ? "Disconnect" : "Connect"}
        disabled={socketState === "connecting" || socketState === "closing"}
        onPress={handleToggleConnect}
      />
    </View>
  );
}

const styles = StyleSheet.create({
  container: {
    flex: 1,
    justifyContent: "center",
    alignItems: "center",
  },
});
```

In the code above, we are injecting the API key from an environment variable.

To make the environment variable available, let's create a .env file in the root directory of the project with the following content:

```bash
EXPO_PUBLIC_SPEECHMATICS_API_KEY='YOUR_API_KEY_GOES_HERE'
```

API keys can be obtained from the Speechmatics User Portal.
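`EXPO_PUBLIC_` variables are inlined into the JavaScript bundle at build time, so anyone with a copy of the app can extract the key. That is why the comment in `obtainJwt` recommends minting the JWT on your server (for example with `createSpeechmaticsJWT` in a Node.js backend). Below is a minimal client-side sketch of that approach. The endpoint URL and the `{ "jwt": "..." }` response shape are hypothetical and must match whatever your backend actually returns; `obtainJwtFromBackend` takes the fetch function as a parameter so it can be tested without a network.

```typescript
// Minimal structural type for the parts of a fetch Response this sketch uses.
interface JsonResponse {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}

// Any fetch-compatible function; in the app you would pass the global `fetch`.
type FetchLike = (
  url: string,
  init?: { method?: string },
) => Promise<JsonResponse>;

// Hypothetical helper: asks YOUR backend for a short-lived Flow JWT.
// Assumes the backend responds with JSON shaped like { "jwt": "<token>" }.
async function obtainJwtFromBackend(
  endpoint: string,
  fetchImpl: FetchLike,
): Promise<string> {
  const response = await fetchImpl(endpoint, { method: "POST" });
  if (!response.ok) {
    throw new Error(`JWT request failed with HTTP ${response.status}`);
  }
  const body = await response.json();
  // Validate the shape instead of trusting the network response blindly.
  if (
    typeof body !== "object" ||
    body === null ||
    typeof (body as { jwt?: unknown }).jwt !== "string"
  ) {
    throw new Error("JWT response did not contain a string `jwt` field");
  }
  return (body as { jwt: string }).jwt;
}
```

In the app, `obtainJwt` could then call something like `obtainJwtFromBackend("https://your-backend.example/flow-token", fetch)`, so the API key never ships inside the bundle.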

Audio Integration

The final step is implementing two-way audio communication. This involves three crucial tasks:

- Microphone input capture in PCM format

- Speaker output routing for Flow responses

- Acoustic Echo Cancellation (AEC) to prevent audio feedback

We'll use the Speechmatics Expo Two Way Audio module to handle these requirements efficiently.
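To make "PCM format" concrete: PCM16 stores each sample as a signed 16-bit integer (little-endian for the `PCM16_sle` format mentioned in the code comments), so every sample takes two bytes. The sketch below converts normalized float samples to PCM16 and computes how much audio a chunk of bytes holds. The Expo Two Way Audio module does this work natively, so these helpers are purely illustrative; the 16 kHz mono rate used in the example is an assumption, so check the Flow documentation for the format it expects.

```typescript
// PCM16: each sample is a signed 16-bit integer, i.e. 2 bytes per sample.
const PCM16_BYTES_PER_SAMPLE = 2;

// Convert normalized float samples in [-1, 1] to signed 16-bit PCM.
// Out-of-range values are clamped so they don't wrap around.
function floatToPcm16(samples: Float32Array): Int16Array {
  const out = new Int16Array(samples.length);
  for (let i = 0; i < samples.length; i++) {
    const s = Math.max(-1, Math.min(1, samples[i]));
    // The negative range has one more step (-32768) than the positive (32767).
    out[i] = s < 0 ? Math.round(s * 0x8000) : Math.round(s * 0x7fff);
  }
  return out;
}

// Duration in milliseconds of a mono PCM16 chunk at the given sample rate.
function pcm16DurationMs(byteLength: number, sampleRate: number): number {
  return (byteLength / PCM16_BYTES_PER_SAMPLE / sampleRate) * 1000;
}
```

For instance, assuming 16 kHz mono audio, a 32,000-byte chunk holds one second of sound.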

To allow microphone access, we need to add some configuration to the app.json file in the root of our project.

For iOS, we add an infoPlist entry, and for Android, a permissions entry.

```json
{
  "expo": {
    ...
    "ios": {
      "infoPlist": {
        "NSMicrophoneUsageDescription": "Allow Speechmatics to access your microphone"
      },
      ...
    },
    "android": {
      "permissions": ["RECORD_AUDIO", "MODIFY_AUDIO_SETTINGS"],
      ...
    }
  }
  ...
}
```

Now we will update the code to request microphone permission and stream audio in both directions. Our /app/index.tsx file should look as follows:

```tsx
// The polyfill should be the first import in the whole app
import "event-target-polyfill";
import { useCallback, useEffect, useState } from "react";
import { Button, StyleSheet, View, Text } from "react-native";
import {
  FlowProvider,
  useFlow,
  useFlowEventListener,
} from "@speechmatics/flow-client-react";

import { createSpeechmaticsJWT } from "@speechmatics/auth";

import {
  type MicrophoneDataCallback,
  initialize,
  playPCMData,
  toggleRecording,
  useExpoTwoWayAudioEventListener,
  useIsRecording,
  useMicrophonePermissions,
} from "@speechmatics/expo-two-way-audio";

export default function Index() {
  const [micPermission, requestMicPermission] = useMicrophonePermissions();
  if (!micPermission?.granted) {
    return (
      <View style={styles.container}>
        <Text>Mic permission: {micPermission?.status}</Text>
        <Button
          title={
            micPermission?.canAskAgain
              ? "Request permission"
              : "Cannot request permissions"
          }
          disabled={!micPermission?.canAskAgain}
          onPress={requestMicPermission}
        />
      </View>
    );
  }
  return (
    <FlowProvider
      appId="react-native-flow-guide"
      websocketBinaryType="arraybuffer"
    >
      <Flow />
    </FlowProvider>
  );
}

function Flow() {
  const [audioInitialized, setAudioInitialized] = useState(false);
  const { startConversation, endConversation, sendAudio, socketState } =
    useFlow();
  const isRecording = useIsRecording();

  // Initialize Expo Two Way Audio
  useEffect(() => {
    const initializeAudio = async () => {
      await initialize();
      setAudioInitialized(true);
    };

    initializeAudio();
  }, []);

  // Setup a handler for the "agentAudio" event from Flow API
  useFlowEventListener("agentAudio", (audio) => {
    // Even though Int16Array is a more natural representation for PCM16_sle,
    // Expo Modules API uses a convertible type for arrays of bytes and it needs Uint8Array in the JS side.
    // This is converted to a `Data` type in Swift and to a `kotlin.ByteArray` in Kotlin.
    // More info here: https://docs.expo.dev/modules/module-api/#convertibles
    // For this reason, the Expo Two Way Audio library requires a Uint8Array argument for the `playPCMData` function.
    const byteArray = new Uint8Array(audio.data.buffer);
    playPCMData(byteArray);
  });

  // Setup a handler for the "onMicrophoneData" event from Expo Two Way Audio module
  useExpoTwoWayAudioEventListener(
    "onMicrophoneData",
    useCallback<MicrophoneDataCallback>(
      (event) => {
        // We send the audio bytes to the Flow API
        sendAudio(event.data.buffer);
      },
      [sendAudio],
    ),
  );

  const obtainJwt = async () => {
    const apiKey = process.env.EXPO_PUBLIC_SPEECHMATICS_API_KEY;
    if (!apiKey) {
      throw new Error("API key not found");
    }
    // WARNING: This is just an example app.
    // In a real app you should obtain the JWT from your server.
    // `createSpeechmaticsJWT` could be used on a server running JS.
    // Otherwise, you will expose your API key to the client.
    return await createSpeechmaticsJWT({
      type: "flow",
      apiKey,
      ttl: 60,
    });
  };

  const handleToggleConnect = async () => {
    if (socketState === "open") {
      endConversation();
    } else {
      try {
        const jwt = await obtainJwt();
        await startConversation(jwt, {
          config: {
            template_id: "flow-service-assistant-humphrey",
            template_variables: {
              timezone: "Europe/London",
            },
          },
        });
      } catch (e) {
        console.log("Error connecting to Flow: ", e);
      }
    }
  };

  // Handle clicks to the 'Mute/Unmute' button
  const handleToggleMute = useCallback(() => {
    toggleRecording(!isRecording);
  }, [isRecording]);

  return (
    <View style={styles.container}>
      <Text>
        {socketState === "open"
          ? isRecording
            ? "Try saying something to Flow"
            : "Tap 'Unmute' to start talking to Flow"
          : "Tap 'Connect' to connect to Flow"}
      </Text>
      <Button
        title={socketState === "open" ? "Disconnect" : "Connect"}
        disabled={socketState === "connecting" || socketState === "closing"}
        onPress={handleToggleConnect}
      />
      <Button
        title={isRecording ? "Mute" : "Unmute"}
        disabled={socketState !== "open" || !audioInitialized}
        onPress={handleToggleMute}
      />
    </View>
  );
}

const styles = StyleSheet.create({
  container: {
    flex: 1,
    justifyContent: "center",
    alignItems: "center",
  },
});
```

Since we've introduced some app configuration changes in app.json, let's rebuild the app from scratch (cleaning the ios or android folders):

```bash
# iOS
npx expo prebuild --clean -p ios
npx expo run:ios

# Android
npx expo prebuild --clean -p android
npx expo run:android
```

Our app can now unmute the microphone to send audio samples to the Flow server. Audio messages received from the Flow server will be played back through the speaker.

While simulators are great for initial testing, features like Acoustic Echo Cancellation require physical devices to work properly. If simulator audio doesn't work, or isn't good enough, we strongly recommend testing on physical devices.

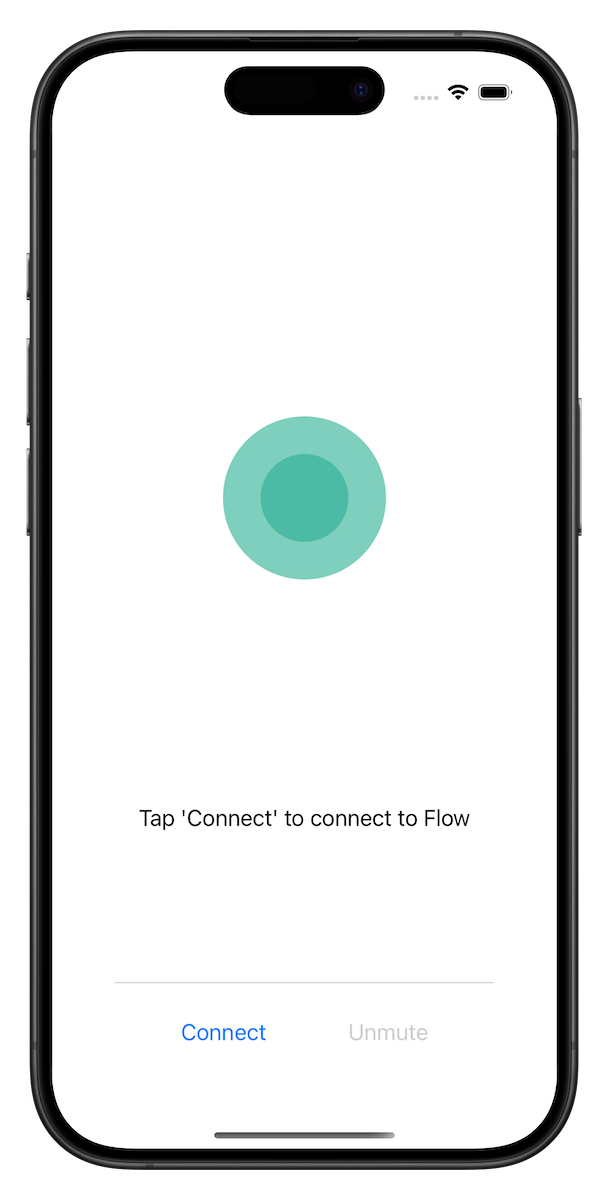
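Both audio handlers convert between typed-array views and raw bytes. `new Uint8Array(audio.data.buffer)` reinterprets the whole underlying `ArrayBuffer`, which matches the samples only when the `Int16Array` starts at byte 0 and spans the entire buffer. If you can't rule out sub-views, a defensive variant passes `byteOffset` and `byteLength` explicitly. The helper names below are hypothetical, and whether the Flow client or the audio module ever hand you a sub-view is not something this guide states.

```typescript
// Reinterpret exactly the bytes covered by an Int16Array view, without copying.
// Passing byteOffset/byteLength matters when the view doesn't start at the
// beginning of its buffer or doesn't cover all of it.
function int16ToBytes(samples: Int16Array): Uint8Array {
  return new Uint8Array(samples.buffer, samples.byteOffset, samples.byteLength);
}

// Copy exactly the bytes covered by a Uint8Array view into a standalone buffer,
// e.g. before passing microphone data to an API that expects a whole buffer.
function bytesToStandaloneBuffer(bytes: Uint8Array): ArrayBufferLike {
  // slice() copies the view's range into a new buffer of exactly byteLength.
  return bytes.slice().buffer;
}
```

Under these assumptions, the playback line would read `playPCMData(int16ToBytes(audio.data))` and the microphone handler `sendAudio(bytesToStandaloneBuffer(event.data))`.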

Volume Indicators

To enhance our UI, we'll add volume indicators for both the microphone and speaker, and organize our buttons into a "bottom bar."

Design Overview

- Volume Indicators: These will consist of two concentric circles:

- Outer Circle: Represents the speaker volume.

- Inner Circle: Represents the microphone volume.

- Animation: The circles will animate to grow or shrink based on the current volume level.
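The size calculation behind each circle is a linear interpolation from a volume level to a diameter. Below is a plain TypeScript sketch of that mapping, assuming the module reports levels normalized to [0, 1]. One difference worth knowing: Reanimated's `interpolate` extends past the output range by default, so a level above 1 would grow the circle beyond `maxSize`; this sketch clamps instead. Inside a worklet, passing `Extrapolation.CLAMP` as the fourth argument to `interpolate` should give the same behavior (check the Reanimated docs for your version).

```typescript
// Map a volume level (assumed normalized to [0, 1]) to a circle diameter.
// Equivalent to interpolate(level, [0, 1], [minSize, maxSize]) with clamping.
function circleDiameter(level: number, minSize: number, maxSize: number): number {
  const t = Math.min(1, Math.max(0, level)); // clamp to [0, 1]
  return minSize + t * (maxSize - minSize);
}
```

With the inner circle's settings (`minSize` 70, `maxSize` 120), a level of 0.5 gives a 95-point circle.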

Implementation Details

We'll use the react-native-reanimated library to handle the animations.

This library is often included by default in Expo apps, but if it's not, you can follow the installation instructions in the react-native-reanimated documentation.

To keep things organised, let’s create a new file inside the app folder to house our custom volume indicator component.

/app/volume-display.tsx

```tsx
import { StyleSheet, View } from "react-native";
import Animated, {
  Easing,
  interpolate,
  useAnimatedStyle,
  withTiming,
  type SharedValue,
} from "react-native-reanimated";

export default function VolumeDisplay({
  volumeLevel,
  color,
  minSize,
  maxSize,
}: {
  volumeLevel: SharedValue<number>;
  color: string;
  minSize: number;
  maxSize: number;
}) {
  const animatedStyle = useAnimatedStyle(() => {
    const size = interpolate(volumeLevel.value, [0, 1], [minSize, maxSize]);
    return {
      width: withTiming(size, {
        duration: 100,
        easing: Easing.linear,
      }),
      height: withTiming(size, {
        duration: 100,
        easing: Easing.linear,
      }),
      borderRadius: withTiming(size / 2, {
        duration: 100,
        easing: Easing.linear,
      }),
    };
  });

  return (
    <View style={styles.container}>
      <Animated.View style={[{ backgroundColor: color }, animatedStyle]} />
    </View>
  );
}

const styles = StyleSheet.create({
  container: {
    justifyContent: "center",
    alignItems: "center",
  },
});
```

Next, we’ll integrate our volume indicator component into the app.

Our /app/index.tsx file should look as follows:

```tsx
// The polyfill should be the first import in the whole app
import "event-target-polyfill";
import { useCallback, useEffect, useState } from "react";
import { Button, StyleSheet, View, Text } from "react-native";
import {
  FlowProvider,
  useFlow,
  useFlowEventListener,
} from "@speechmatics/flow-client-react";
import { createSpeechmaticsJWT } from "@speechmatics/auth";
import {
  type MicrophoneDataCallback,
  type VolumeLevelCallback,
  initialize,
  playPCMData,
  toggleRecording,
  useExpoTwoWayAudioEventListener,
  useIsRecording,
  useMicrophonePermissions,
} from "@speechmatics/expo-two-way-audio";
import { useSharedValue } from "react-native-reanimated";
import VolumeDisplay from "./volume-display";

export default function Index() {
  const [micPermission, requestMicPermission] = useMicrophonePermissions();
  if (!micPermission?.granted) {
    return (
      <View style={styles.container}>
        <Text>Mic permission: {micPermission?.status}</Text>
        <Button
          title={
            micPermission?.canAskAgain
              ? "Request permission"
              : "Cannot request permissions"
          }
          disabled={!micPermission?.canAskAgain}
          onPress={requestMicPermission}
        />
      </View>
    );
  }
  return (
    <FlowProvider
      appId="react-native-flow-guide"
      websocketBinaryType="arraybuffer"
    >
      <Flow />
    </FlowProvider>
  );
}

function Flow() {
  const [audioInitialized, setAudioInitialized] = useState(false);
  const inputVolumeLevel = useSharedValue(0.0);
  const outputVolumeLevel = useSharedValue(0.0);
  const { startConversation, endConversation, sendAudio, socketState } =
    useFlow();
  const isRecording = useIsRecording();

  // Initialize Expo Two Way Audio
  useEffect(() => {
    const initializeAudio = async () => {
      await initialize();
      setAudioInitialized(true);
    };
    initializeAudio();
  }, []);

  // Setup a handler for the "agentAudio" event from Flow API
  useFlowEventListener("agentAudio", (audio) => {
    // Even though Int16Array is a more natural representation for PCM16_sle,
    // Expo Modules API uses a convertible type for arrays of bytes and it needs Uint8Array in the JS side.
    // This is converted to a `Data` type in Swift and to a `kotlin.ByteArray` in Kotlin.
    // More info here: https://docs.expo.dev/modules/module-api/#convertibles
    // For this reason, the Expo Two Way Audio library requires a Uint8Array argument for the `playPCMData` function.
    const byteArray = new Uint8Array(audio.data.buffer);
    playPCMData(byteArray);
  });

  // Setup a handler for the "onMicrophoneData" event from Expo Two Way Audio module
  useExpoTwoWayAudioEventListener(
    "onMicrophoneData",
    useCallback<MicrophoneDataCallback>(
      (event) => {
        // We send the audio bytes to the Flow API
        sendAudio(event.data.buffer);
      },
      [sendAudio]
    )
  );

  const obtainJwt = async () => {
    const apiKey = process.env.EXPO_PUBLIC_SPEECHMATICS_API_KEY;
    if (!apiKey) {
      throw new Error("API key not found");
    }
    // WARNING: This is just an example app.
    // In a real app you should obtain the JWT from your server.
    // `createSpeechmaticsJWT` could be used on a server running JS.
    // Otherwise, you will expose your API key to the client.
    return await createSpeechmaticsJWT({
      type: "flow",
      apiKey,
      ttl: 60,
    });
  };

  const handleToggleConnect = async () => {
    if (socketState === "open") {
      endConversation();
    } else {
      try {
        const jwt = await obtainJwt();
        await startConversation(jwt, {
          config: {
            template_id: "flow-service-assistant-humphrey",
            template_variables: {
              timezone: "Europe/London",
            },
          },
        });
      } catch (e) {
        console.log("Error connecting to Flow: ", e);
      }
    }
  };

  // Handle clicks to the 'Mute/Unmute' button
  const handleToggleMute = useCallback(() => {
    toggleRecording(!isRecording);
  }, [isRecording]);

  // Setup a handler for the "onInputVolumeLevelData" event from Expo Two Way Audio module.
  // This event is triggered when the input volume level changes.
  useExpoTwoWayAudioEventListener(
    "onInputVolumeLevelData",
    useCallback<VolumeLevelCallback>(
      (event) => {
        // We update the react-native-reanimated shared value for the input volume (microphone).
        // This allows us to animate the microphone volume based on this shared value.
        inputVolumeLevel.value = event.data;
      },
      [inputVolumeLevel]
    )
  );

  // Setup a handler for the "onOutputVolumeLevelData" event from Expo Two Way Audio module.
  // This event is triggered when the output volume level changes.
  useExpoTwoWayAudioEventListener(
    "onOutputVolumeLevelData",
    useCallback<VolumeLevelCallback>(
      (event) => {
        // We update the react-native-reanimated shared value for the output volume (speaker).
        // This allows us to animate the speaker volume based on this shared value.
        outputVolumeLevel.value = event.data;
      },
      [outputVolumeLevel]
    )
  );

  return (
    <View style={styles.container}>
      <View style={styles.volumeDisplayContainer}>
        <View style={styles.volumeDisplay}>
          <VolumeDisplay
            volumeLevel={outputVolumeLevel}
            color="#7ECFBD"
            minSize={130}
            maxSize={180}
          />
        </View>
        <View style={styles.volumeDisplay}>
          <VolumeDisplay
            volumeLevel={inputVolumeLevel}
            color="#4CBBA5"
            minSize={70}
            maxSize={120}
          />
        </View>
      </View>
      <View>
        <Text style={{ fontSize: 18 }}>
          {socketState === "open"
            ? isRecording
              ? "Try saying something to Flow"
              : "Tap 'Unmute' to start talking to Flow"
            : "Tap 'Connect' to connect to Flow"}
        </Text>
      </View>
      <View style={styles.bottomBar}>
        <View style={styles.buttonContainer}>
          <Button
            title={socketState === "open" ? "Disconnect" : "Connect"}
            disabled={socketState === "connecting" || socketState === "closing"}
            onPress={handleToggleConnect}
          />
          <Button
            title={isRecording ? "Mute" : "Unmute"}
            disabled={socketState !== "open" || !audioInitialized}
            onPress={handleToggleMute}
          />
        </View>
      </View>
    </View>
  );
}

const styles = StyleSheet.create({
  container: {
    flex: 1,
    alignItems: "center",
    justifyContent: "space-evenly",
    padding: 50,
  },
  buttonContainer: {
    flexDirection: "row",
    justifyContent: "space-around",
    width: "100%",
    marginBottom: 20,
  },
  bottomBar: {
    position: "absolute",
    bottom: 0,
    width: "100%",
    padding: 20,
    borderTopWidth: 1,
    borderTopColor: "lightgray",
  },
  volumeDisplayContainer: {
    position: "relative",
    width: 150,
    height: 150,
    alignItems: "center",
    justifyContent: "center",
  },
  volumeDisplay: {
    position: "absolute",
  },
});
```

We have successfully completed our conversational AI application! You can connect to Flow services and unmute the microphone to start a conversation.

Testing on Physical Devices

To deploy to real devices, first configure your development environment for local builds on physical devices.

Then run the following command:

```bash
# For iOS devices
npx expo run:ios --device --configuration Release

# For Android devices
npx expo run:android --device --variant release
```

Additional resources

Dive deeper into the tools used in this guide: